You collected the data. You ran the tests in SPSS. You got a p-value of 0.04. You celebrated. You submitted the paper… and it was rejected.

The reviewer didn’t say your topic was bad. They said: “The statistical power is insufficient,” or “The data show signs of p-hacking.”

Welcome to the “Reproducibility Era” of academic publishing.

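In 2026, the days of running a simple t-test and hoping for the best are over. Top-tier journals (Q1 Scopus/Web of Science) are no longer just reading your results; increasingly, they are auditing your raw data. Automated tools like statcheck (which recomputes p-values from your reported test statistics and degrees of freedom) and GRIMMER (which checks whether reported means and standard deviations are even possible for your sample size) can detect in seconds if your numbers don’t add up.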
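
To make this kind of check concrete, here is a minimal sketch of a GRIM-style granularity test, the idea that GRIMMER extends to standard deviations. It assumes the underlying scores are whole numbers (for example, single Likert items); the function name `grim_consistent` and its parameters are illustrative and are not the API of any published tool.

```python
import math


def grim_consistent(reported_mean: float, n: int, decimals: int = 2) -> bool:
    """Check whether a reported mean is achievable from n integer scores.

    With integer data, the true mean must equal (some integer total) / n.
    We test the two integer totals closest to reported_mean * n and see
    whether either one rounds back to the reported value.
    """
    if n <= 0:
        raise ValueError("n must be a positive integer")
    half_unit = 0.5 * 10 ** (-decimals)  # largest possible rounding error
    approx_total = reported_mean * n
    for total in (math.floor(approx_total), math.ceil(approx_total)):
        # Small epsilon guards against floating-point noise at the boundary.
        if abs(total / n - reported_mean) <= half_unit + 1e-9:
            return True
    return False


# Hypothetical reported values, for illustration only.
print(grim_consistent(3.48, 25))  # 87 / 25 = 3.48, so this mean is possible
print(grim_consistent(3.47, 25))  # no integer total divided by 25 gives 3.47
```

A check like this only has teeth for small samples: with two reported decimals and more than about 100 participants, nearly every mean is achievable, so a pass proves very little.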

If you are a PhD scholar struggling to make sense of your data, or if you are terrified of a “Statistical Rejection,” this post is your survival guide.

The New Standard: “Show Your Code”

The biggest shift in 2026 is the Open Data Mandate. Journals like PLOS ONE and Nature now expect you to make your raw dataset and analysis code (R/Python) available along with your manuscript.

This exposes you to three deadly risks:

- P-Hacking Detection: If you quietly removed “outliers” just to get a significant result (p < 0.05), anyone who reruns your code on the raw data can spot it and flag it as manipulation.

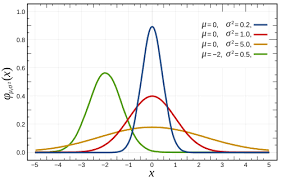

- Model Mismatch: Did you use ANOVA when you should have used Kruskal-Wallis? If your data violate the assumptions of a parametric test (such as normality or equal variances) and you used one anyway, your entire results section is open to challenge.

- The “Sample Size” Critique: Reviewers now expect an a priori power analysis (for example, in G*Power). If you cannot justify why you chose 200 respondents, they will assume your study lacks power.
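
As a rough illustration of the sample size point, the sketch below estimates how many participants per group a two-sided, two-sample comparison of means needs. It uses the standard normal approximation, not the exact noncentral t calculation, and the function name `n_per_group` and the chosen effect sizes are assumptions made for this example.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size_d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate per-group n for a two-sided, two-sample comparison of means.

    Normal approximation: n = 2 * ((z_(1 - alpha/2) + z_power) / d) ** 2,
    where d is the standardized effect size (Cohen's d).
    """
    if effect_size_d <= 0:
        raise ValueError("effect_size_d must be positive")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value, two-sided test
    z_power = NormalDist().inv_cdf(power)          # quantile for the target power
    return math.ceil(2 * ((z_alpha + z_power) / effect_size_d) ** 2)


# Conventional small, medium, and large effects (illustrative choices).
for d in (0.2, 0.5, 0.8):
    print(f"d = {d}: about {n_per_group(d)} participants per group")
```

Exact t-based calculations, such as the one G*Power performs, typically come out a participant or two higher per group, so treat this as a sanity check and not as the number to put in your methods section.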

It’s Not Just Math. It’s Storytelling.

Statistics is a language. If you can’t speak it fluently, you can’t defend your thesis.

- The Mistake: Dumping huge tables of raw SPSS output into your thesis.

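- The Fix: You need to interpret the implications. Don’t just say “The correlation is 0.7.” Say “The strong positive correlation (r = 0.7) indicates that Variable A is closely associated with Variable B, supporting hypothesis H1.” A correlation on its own does not show that A drives B, so keep causal language for designs that can support it.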
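
One way to turn a bare coefficient into an interpretable statement is to report it with a confidence interval and the share of variance explained. The sketch below uses the Fisher z-transformation for the interval; the function name `correlation_ci` and the pairing of r = 0.7 with n = 200 are hypothetical, chosen only to illustrate the calculation.

```python
import math
from statistics import NormalDist


def correlation_ci(r: float, n: int, confidence: float = 0.95) -> tuple[float, float]:
    """Approximate confidence interval for a Pearson correlation.

    Uses the Fisher z-transformation: atanh(r) is roughly normal with
    standard error 1 / sqrt(n - 3), and the limits are mapped back with tanh.
    """
    if not -1 < r < 1:
        raise ValueError("r must lie strictly between -1 and 1")
    if n <= 3:
        raise ValueError("n must be greater than 3")
    z = math.atanh(r)
    se = 1 / math.sqrt(n - 3)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)


r, n = 0.7, 200  # hypothetical values for illustration
low, high = correlation_ci(r, n)
print(f"r = {r:.2f}, 95% CI [{low:.2f}, {high:.2f}], r^2 = {r ** 2:.2f}")
```

A sentence built from these three numbers tells the reader how strong the association is, how precisely it was estimated, and how much variance it accounts for, which is far more defensible than a raw output table.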

How McKinley Research “Audits” Your Data

You are a researcher, not a statistician. You shouldn’t lose your degree because of a wrong formula. At McKinley Research, we offer specialized PhD Statistical Consulting & Data Verification.

- The “Pre-Submission” Audit: We run your dataset through the same kind of automated consistency checks that journals use. If there is an error, we find it before the reviewer does.

- Methodology Defense: We help you write the “Data Analysis” chapter, justifying exactly why you chose structural equation modeling (SEM) over regression, so you can defend it in your viva.

- The “Rescue” Service: Stuck with “Insignificant Results”? We don’t fake data. Instead, we help you pivot your hypothesis or use advanced post hoc analyses to find the hidden value in your negative results.

Don’t Let a Decimal Point Destroy Your Career

Your research is too important to die in a spreadsheet. Ensure your numbers are robust, reproducible, and ready for high-impact publication.

Struggling with SPSS, R, or Python? Contact McKinley Research today for a Statistical Consultation.